Speech synthesizers

(summary) |

(added concatenation, formant, and articulatory synthesis sections) |

||

| (12 intermediate revisions by the same user not shown) | |||

| Line 3: | Line 3: | ||

These applications require speech that is intelligible and natural-sounding. Today speech synthesis systems achieve great deal of naturalness compare to a real human voice. Yet they are still perceived as non-human because minor audible glitches still remain present in the outputted utterances. It may very well be that modern speech synthesis has reached the point in the Uncanny Valley where the closeness of the artificial speech is so near perfection that humans find it unnatural. | These applications require speech that is intelligible and natural-sounding. Today speech synthesis systems achieve great deal of naturalness compare to a real human voice. Yet they are still perceived as non-human because minor audible glitches still remain present in the outputted utterances. It may very well be that modern speech synthesis has reached the point in the Uncanny Valley where the closeness of the artificial speech is so near perfection that humans find it unnatural. | ||

| − | == | + | === Historical overview === |

| − | + | [[File:Vonkempelen.gif|thumbnail|right|Schematics of von Kempelen's pneumatic speech synthesizer]] | |

| + | The history of speech synthesis date back to the 18th century when Hungarian civil servant and inventor Wolfgan von Kempelen created a machine of pipes and elbows and assorted parts of musical instruments. He achieved a sufficient imitation of the human vocal tract with the third iteration. He published a comprehensive description of the design in his book entitled The Mechanism of Human Speech, with a Description of a Speaking Machine in 1791. | ||

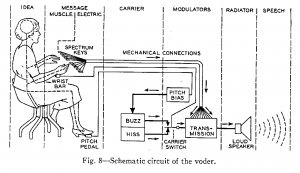

| + | [[File:Voder schematic.png|thumbnail|right|Voder developed by Bell Laboratories ]] | ||

| + | The design was picked up by Sir Charles Wheatstone, a Victorian Era English inventor, who improved on the Kemplelen's design. The newly sparked interest into the research of phonetics and the Whetstone's work inspired Alexander Graham Bell to do his own research into the matter and eventually arrive at the idea of the telephone. Bell laboratories created the Voice Operating Demonstrator, or Voder for short, a human speech synthesizer in the 1930s. Voder was a model of a human vocal tract, basically an early version of a formant synthesizer, and required a trained operator who had to manually create utterances using a console with fifteen touch-sensitive keys and a pedal. | ||

| + | |||

| + | Digital speech synthesizer begun to emerge in the 1980s, following the MIT-developed DECtalk text-to-speech synthesizer. This synthesizer was notably used by the physicist Stephen Hawking. His website claims that he uses it even today. | ||

| + | |||

| + | In the beginnings of the modern day speech synthesis, two main approaches appeared. [[Formant synthesis]] that tries to model the entire vocal tract of a human, and [[articulatory synthesis]] which aims to create the sounds speech is made of from scratch. However, both methods are gradually replaced by [[concatenation synthesis]] nowadays. This form of synthesis uses a large set, speech corpus, of high-quality pre-recorded audio samples. These samples can be assembled together and form a new utterance. | ||

| + | <!-->== Main characteristics == | ||

| + | Speech synthesizers create synthetic speech by various ways. They are used to [[Articulatory synthesis|mimic how the human vocal tract works]] and how the air passes through it, they are used to [[Formant synthesis|manipulate sounds to create the basic building blocks of speech]], or they [[Concatenation synthesis|assemble new utterances from a large database]] of pre-recorded audio samples.</--> | ||

=== Purpose === | === Purpose === | ||

| − | |||

| − | == | + | The purpose of speech synthesis is to model, research, and create synthetic speech for applications where communicating information via text is undesirable or cumbersome. It is used in 'giving voice' to virtual assistants and in text-to-speech, most notably as a speech aid for visually impaired people or for those who lost their own voice. |

| − | + | ||

| − | + | == Concatenation synthesis == | |

| − | == | + | [[File:Concatenation synthesis 1.png|thumbnail|right|Unit selection concatenation speech synthesis]] |

| − | + | Concatenation synthesis is a form of [[speech synthesis]] that uses corresponding short samples of previously recorded speech to construct new utterances. These samples vary in length, ranging between 1 second to several milliseconds. These small samples are subsequently modified so they better fit together according to the type, structure, and mainly the contents of the synthesized text. (ref Tihelka dis. práce) These samples, or units, can be individual phones, diphones, morphemes, or even entire phrases. So instead of changing the characteristics of the sound to generate intelligible speech, concatenation synthesis works with a large set of pre-recorded data from which, much like a building set, builds utterances that originally were not present in the data set.<ref>http://www.cs.cmu.edu/~awb/papers/IEEE2002/allthetime/allthetime.html</ref> | |

| + | |||

| + | The usage of real recorded sounds allows the production of very high quality artificial speech. This is advantageous in comparison to [[Formant synthesis]] as we do not require approximate models of the entire vocal tract. However, some speech characteristics, such as voice character, or mood can not be modified as easily. (ref Tihelka 10) A unit placed in a not very ideal position can result in audible glitches and unnaturally sounding speech because the contours of the unit do not perfectly fit together with the surrounding units. This problem is minimal in domain specific synthesizers but general models require large data sets and additional output control that detects and repairs these glitches.(ref Taylor 425) | ||

| + | |||

| + | First, a data set of high quality audio samples needs to be recorded and divided into units. The most common type of a unit is the diphone, but other larger or smaller units can be also used. The set of such units creates the speech corpus. Diphones inventory can be artificially created,(Taylor 425) or based on many hours of recorded speech produced by one speaker.(Tihelka 17)(Taylor 426) These being either spoken monotonously, as to create units with neutral prosody, or spoken naturally. The corpus is then segmented into discrete units. Segments such as silence or breath-ins are also marked and saved. | ||

| + | |||

| + | The speech corpus prepared in this way is then used for construction of new utterances. A large database, or in other words, a large set of units with varied prosodic and sound characteristics, should safeguard more natural-sounding speech. In Unit selection synthesis, one of the approaches Concatenation synthesis uses, the target utterance is first predicted, suitable units are picked from the speech corpus, assembled, and their suitability towards the target utterance tested.(ref. Clark & King) | ||

| + | |||

| + | == Formant synthesis == | ||

| + | |||

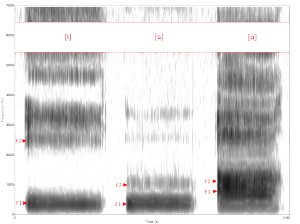

| + | [[File:Spectrogram -iua-.png|thumbnail|right|Spectrogram of American English vowels [i, u, ɑ] showing the formants F1 and F2]] | ||

| + | Formant synthesis is the first truly crystallized method of synthesizing speech. It used to be the dominant method of speech synthesis until the 1980s. Some call it a synthesis "from scratch" or "synthesis by rule". (ref Paul Taylor p 422) In contrast to the more recent approach of concatenation synthesis, formant synthesis does not use sampled audio. Instead, the sound is created from scratch. This results in a rather unnatural sounding artificial voice but allows application where the usage of audio samples-based synthesis would be unfeasible. | ||

| + | |||

| + | The result is intelligible, clear sounding speech, but listeners immediately notice that is was not spoken by a human as the geenrated speech sounds "robotic" or "hollow". The model is too simplistic and does not accurately reflect the subtleties of a real human vocal tract which consists of many different articulation working together. | ||

| + | |||

| + | Formant synthesis uses modular, model-based approach to creating the artificial speech. Synthesizer utilizing this method rely on an observable and controllable model of an acoustic tube. This model typically has a layout of a vocal tract with two system functioning parallel. The sound is generated from a source and then fed to a vocal tract model. The vocal tract is modelled so the oral and nasal cavities are separate and parallel but only passes through one of them depending on the type of (i.e. nasalized sounds) sounds needed at the moment. Both cavities are combined on the output and pass through a radiation component that simulates the sound characteristics of real lips and nose. | ||

| + | |||

| + | TODO: Add block diagram of the basic formant synthesizer | ||

| − | + | However, this method does not represent an accurate model of the vocal tract because it allows separate and independent control over every aspect of the individual formants. But in a real vocal tract, the formant is constructed as a whole by the entire system. It is not possible to highlight one part of it and base an artificial model on it. (ibid 399) | |

| − | + | ||

| − | == | + | == Articulatory synthesis == |

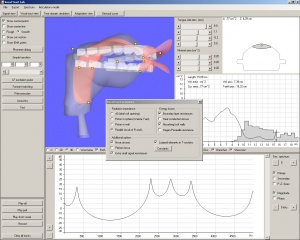

| − | + | [[File:Articulatory synthesis model 1.jpg|thumbnail|right|3D model of a human vocal tract by VocalTractLab articulatory speech synthesizer]] | |

| + | Articulatory synthesis is the oldest form of speech synthesis. It tries to mimic the entire human vocal tract and the way the air passes through it when speaking. Modern synthesizer of this kind are based on the acoustic tube model such as the [[Formant synthesis]] uses. But compared to those models, articulatory synthesis uses the entire system as the parameter and the entire tubes as the controls. This allows the creation of very complex models but with increasing complexity the difficulty of effectively tracking the behaviour of the innards of the system decrease, because a small change in the tube settings propagate into complex speech patters. But this also makes articulatory synthesis attractive, because the researches do not need to model complex formant trajectories explicitly. In other words, artificial speech is produced by controlling the modelled vocal tract and not by controlling the produced sound itself.<ref>http://www.vocaltractlab.de/index.php?page=background-articulatory-synthesis</ref> | ||

| − | + | This synthesis simulates the airflow through the vocal tract of a human. Unlike [[Formant synthesis]], the entire modelled system is controlled as a whole, as it does not comprise of connected, discrete modules. By changing the characteristics of the components, tubes, of the articulatory system, different aspects of the produced voice can be achieved. | |

| − | + | ||

| − | + | Articulatory synthesis deals with two inherent problems. First, the resulting model must find balance between being precise and complex and being simplistic but observable and controllable. Because the vocal tract is very complex, models mimicking it very closely are inherently very complicated, and this makes them difficult to tract and control. This is connected to a second problem. It is important to base the model of the vocal tract on data as precise as possible. Modern screening methods such as MRI or X-Rays is used to see the human vocal tract and it's working when producing speech. This data is then used for construction of the articulatory model, but methods such as these are often intrusive and data from them are difficult to deduce. | |

| − | + | ||

| − | + | Both these obstacles diminish the quality of the speech produced by these models when compared to modern methods and articulatory synthesis was largely abandoned for the purposes of speech synthesise. It is still used, however, for the research of the human vocal tract physiology, phonology, or visualisation of the human head when speaking.(ref Taylor 417) | |

| − | + | ||

== References == | == References == | ||

Latest revision as of 13:13, 19 February 2016

Speech synthesis is the generation of artificial speech by mechanical means or by a computer algorithm. It is used when information needs to be communicated acoustically. Nowadays it is found in text-to-speech applications (screen readers, assistance for the visually impaired), in virtual assistants (GPS navigation, mobile assistants such as Microsoft Cortana or Apple Siri), and in any other situation where information that is usually available as text has to be conveyed by voice. It is often paired with speech recognition.

These applications require speech that is both intelligible and natural-sounding. Today's speech synthesis systems achieve a high degree of naturalness compared to a real human voice. Yet they are still perceived as non-human because minor audible glitches remain in their output. Modern speech synthesis may have reached the uncanny valley, where artificial speech is so close to perfection that the remaining imperfections make listeners find it unnatural.


Historical overview

The history of speech synthesis dates back to the 18th century. At that time the Hungarian civil servant and inventor Wolfgang von Kempelen built a speaking machine from pipes, elbows, and assorted parts of musical instruments. With its third iteration he achieved a sufficient imitation of the human vocal tract. In 1791 he published a comprehensive description of the design in his book The Mechanism of Human Speech, with a Description of a Speaking Machine.

The design was picked up by Sir Charles Wheatstone, a Victorian Era English inventor, who improved on the Kemplelen's design. The newly sparked interest into the research of phonetics and the Whetstone's work inspired Alexander Graham Bell to do his own research into the matter and eventually arrive at the idea of the telephone. Bell laboratories created the Voice Operating Demonstrator, or Voder for short, a human speech synthesizer in the 1930s. Voder was a model of a human vocal tract, basically an early version of a formant synthesizer, and required a trained operator who had to manually create utterances using a console with fifteen touch-sensitive keys and a pedal.

Digital speech synthesizers became widespread in the 1980s. A notable example is DECtalk, a text-to-speech synthesizer that Digital Equipment Corporation developed from Dennis Klatt's research at MIT. The physicist Stephen Hawking famously used this synthesizer. At the time of writing, his website stated that he still used it.

Two main approaches appeared in the early days of modern speech synthesis. Articulatory synthesis tries to model the entire vocal tract of a human. Formant synthesis creates the sounds that speech is made of from scratch. Both methods have since been largely replaced by concatenation synthesis. This form of synthesis uses a large set of high-quality pre-recorded audio samples, called a speech corpus, and assembles these samples into new utterances.

Purpose

The purpose of speech synthesis is to model, research, and create synthetic speech for applications where communicating information via text is undesirable or cumbersome. It is used to "give voice" to virtual assistants and in text-to-speech. Its most notable use is as a speech aid for visually impaired people and for those who have lost their own voice.

Concatenation synthesis

Concatenation synthesis is a form of speech synthesis that constructs new utterances from short samples of previously recorded speech. The samples range in length from several milliseconds to about one second. They are then modified so that they fit together better according to the type, structure, and above all the content of the synthesized text (Tihelka, dissertation). These samples, or units, can be individual phones, diphones, morphemes, or even entire phrases. Concatenation synthesis does not change the characteristics of the sound to generate intelligible speech. Instead, it draws on a large set of pre-recorded data from which, much like a construction set, it builds utterances that were not originally present in the data.[1]
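A minimal sketch can illustrate the assembly step. It assumes the units have already been chosen and are available as lists of samples at a common sampling rate. The function name `crossfade_concat` and the linear crossfade at each join are illustrative choices, not the method of any particular synthesizer. Real systems also adjust pitch and duration (for example with PSOLA), which this sketch omits.

```python
from typing import List, Sequence


def crossfade_concat(units: Sequence[Sequence[float]], overlap: int) -> List[float]:
    """Join waveform units end to end (hypothetical helper).

    At each join, `overlap` samples of the previous output are blended with
    the start of the next unit using a linear crossfade, so the seam is less
    abrupt than a hard cut. With overlap=0 this is plain concatenation.
    """
    if overlap < 0:
        raise ValueError("overlap must be non-negative")
    out: List[float] = []
    for unit in units:
        samples = list(unit)
        # The overlap cannot exceed what is available on either side of the join.
        n = min(overlap, len(out), len(samples))
        tail_start = len(out) - n
        for i in range(n):
            # Weight of the incoming unit rises from near 0 to near 1 across the overlap.
            w = (i + 1) / (n + 1)
            out[tail_start + i] = (1 - w) * out[tail_start + i] + w * samples[i]
        # Everything after the overlap is appended unchanged.
        out.extend(samples[n:])
    return out
```

Each join shortens the result by `overlap` samples, because the overlapping regions are merged rather than placed side by side.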

Using real recorded sounds makes it possible to produce very high-quality artificial speech. This is an advantage over formant synthesis, because no approximate model of the entire vocal tract is required. However, some speech characteristics, such as voice character or mood, cannot be modified as easily (Tihelka, p. 10). A unit placed in an unsuitable position can cause audible glitches and unnatural-sounding speech. This happens because the contours of the unit, such as its pitch or loudness at the edges, do not fit smoothly with the surrounding units. The problem is minor in domain-specific synthesizers. General-purpose systems, however, require large data sets and additional output control that detects and repairs these glitches (Taylor, p. 425).
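One simple way to detect such glitches is to score every join by how much the features on either side disagree. This sketch assumes that each (hypothetical) `Unit` stores pitch and energy at its edges and that both units are voiced. The unit-weighted sum of an F0 difference in Hz and an energy difference in dB, as well as the threshold, are arbitrary illustrations. A real system would tune these or replace them with spectral features.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Unit:
    """Hypothetical unit record holding features measured at its edges."""
    label: str
    f0_start: float      # pitch at the first frame, Hz
    f0_end: float        # pitch at the last frame, Hz
    energy_start: float  # energy at the first frame, dB
    energy_end: float    # energy at the last frame, dB


def join_cost(left: Unit, right: Unit, w_f0: float = 1.0, w_energy: float = 1.0) -> float:
    """Weighted mismatch in pitch and energy across the join left -> right."""
    return (w_f0 * abs(left.f0_end - right.f0_start)
            + w_energy * abs(left.energy_end - right.energy_start))


def flag_glitches(units: List[Unit], threshold: float) -> List[int]:
    """Indices i whose join with units[i + 1] costs more than the threshold,
    i.e. candidate places for an audible discontinuity."""
    return [i for i in range(len(units) - 1)
            if join_cost(units[i], units[i + 1]) > threshold]
```

For example, a jump from 121 Hz to 150 Hz between neighbouring units is flagged at a threshold of 10, while a 1 Hz step is not.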

First, a data set of high-quality audio samples needs to be recorded and divided into units. The most common unit is the diphone, but larger or smaller units can also be used. The set of such units forms the speech corpus. A diphone inventory can be created artificially (Taylor, p. 425). Alternatively, it can be derived from many hours of speech recorded by a single speaker (Tihelka, p. 17; Taylor, p. 426). The recordings are spoken either monotonously, to obtain units with neutral prosody, or naturally. The corpus is then segmented into discrete units. Segments such as silence or breath intakes are also marked and saved.
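For diphones, the segmentation step amounts to cutting the recordings at the middle of each phone, so that every unit contains the transition between two neighbouring phones. The following is a minimal sketch. It assumes a phone-level alignment (label, start and end time in seconds) is already available, for instance from a forced aligner. `to_diphones` and the `sil` label for silence are hypothetical names.

```python
from typing import List, Tuple

# One aligned segment: (label, start time in seconds, end time in seconds)
Segment = Tuple[str, float, float]


def to_diphones(phones: List[Segment]) -> List[Segment]:
    """Turn a phone-level alignment into diphone units.

    Each diphone runs from the midpoint of one phone to the midpoint of the
    next, so the transition between the two phones lies inside the unit
    rather than at its edges, where it would be hard to join smoothly.
    """
    diphones: List[Segment] = []
    for (left, l_start, l_end), (right, r_start, r_end) in zip(phones, phones[1:]):
        start = (l_start + l_end) / 2
        end = (r_start + r_end) / 2
        diphones.append((f"{left}-{right}", start, end))
    return diphones
```

Silence is kept as an ordinary labelled segment (`sil` here), so the corpus also gains units such as `sil-h` for the start of an utterance.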

The speech corpus prepared in this way is then used to construct new utterances. A large database, that is, a large set of units with varied prosodic and acoustic characteristics, should help ensure more natural-sounding speech. Unit selection synthesis is one of the approaches used in concatenation synthesis. It first predicts the target utterance. It then picks suitable units from the speech corpus, assembles them, and evaluates how well they suit the target utterance (Clark & King).
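A widely used way to formalize this search is to minimise a sum of two kinds of cost. Target costs measure how well each unit matches the predicted target. Join costs measure how smoothly neighbouring units connect. A Viterbi (dynamic-programming) search finds the cheapest sequence exactly. This is a sketch under that assumption. The cost functions are supplied by the caller, `select_units` is a hypothetical name, and the procedure is not necessarily the one described by Clark & King.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def select_units(
    targets: Sequence[str],
    candidates: Dict[str, List[str]],
    target_cost: Callable[[str, str], float],
    join_cost: Callable[[str, str], float],
) -> Tuple[List[str], float]:
    """Return the candidate sequence (one unit per target) with the lowest
    total target cost plus join cost, found by a Viterbi search."""
    # best[i][u] = (cheapest total cost of a path ending in unit u at
    #               position i, the unit chosen at position i - 1 on that path)
    best: List[Dict[str, Tuple[float, str]]] = [
        {u: (target_cost(targets[0], u), "") for u in candidates[targets[0]]}
    ]
    for i in range(1, len(targets)):
        layer: Dict[str, Tuple[float, str]] = {}
        for u in candidates[targets[i]]:
            # Cheapest way to reach u: best previous path plus the cost of the join.
            prev, cost = min(
                ((p, c + join_cost(p, u)) for p, (c, _) in best[-1].items()),
                key=lambda pair: pair[1],
            )
            layer[u] = (cost + target_cost(targets[i], u), prev)
        best.append(layer)
    # Pick the cheapest final unit and follow the back-pointers to the start.
    last, (total, _) = min(best[-1].items(), key=lambda item: item[1][0])
    path = [last]
    for i in range(len(targets) - 1, 0, -1):
        path.append(best[i][path[-1]][1])
    path.reverse()
    return path, total
```

Suppose the best-matching candidates for two neighbouring targets join badly. The search can then prefer a slightly worse target match that joins smoothly, which a greedy left-to-right choice would miss.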

Formant synthesis

Formant synthesis was the first well-established method of synthesizing speech, and it remained the dominant method until the 1980s. It is sometimes called synthesis "from scratch" or "synthesis by rule" (Taylor, p. 422). Unlike the more recent concatenation synthesis, formant synthesis does not use sampled audio. Instead, the sound is generated entirely from a model. This results in a rather unnatural-sounding artificial voice. In exchange, it allows applications where sample-based synthesis would be infeasible, for example when there is too little memory to store a speech corpus.

The result is intelligible, clear speech. However, listeners immediately notice that it was not spoken by a human, because the generated speech sounds "robotic" or "hollow". The model is too simplistic to reflect the subtleties of a real human vocal tract, in which many different articulators work together.

Formant synthesis takes a modular, model-based approach to creating artificial speech. Synthesizers using this method rely on an observable and controllable model of an acoustic tube. This model typically follows the layout of a vocal tract, with two systems working in parallel. A source generates the sound, which is then fed into the vocal tract model. In this model, the oral and nasal cavities are separate, parallel branches. The signal passes through only one of them, depending on the type of sound needed at the moment (e.g. nasalized sounds). The outputs of both branches are combined and passed through a radiation component that simulates the acoustic characteristics of the real lips and nose.
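The following is a minimal sketch of this layout. It makes three assumptions: an impulse train serves as the source, each branch is a cascade of Klatt-style second-order resonators, and a first difference acts as the radiation component. All names (`synthesize`, `cascade`, `magnitude_at`) are hypothetical. The formant frequencies and bandwidths in the usage note are illustrative values, not measurements.

```python
import cmath
import math
from typing import List, Sequence, Tuple

Formant = Tuple[float, float]  # (centre frequency in Hz, bandwidth in Hz)


def resonator(x: Sequence[float], freq: float, bw: float, fs: float) -> List[float]:
    """Klatt-style second-order resonator, normalised to unity gain at 0 Hz."""
    c = -math.exp(-2 * math.pi * bw / fs)
    b = 2 * math.exp(-math.pi * bw / fs) * math.cos(2 * math.pi * freq / fs)
    a = 1.0 - b - c
    y1 = y2 = 0.0
    out = []
    for s in x:
        y = a * s + b * y1 + c * y2
        out.append(y)
        y1, y2 = y, y1
    return out


def cascade(x: Sequence[float], formants: Sequence[Formant], fs: float) -> List[float]:
    """Pass the signal through one resonator per formant, in series."""
    y = list(x)
    for freq, bw in formants:
        y = resonator(y, freq, bw, fs)
    return y


def synthesize(oral: Sequence[Formant], nasal: Sequence[Formant], use_nasal: bool,
               f0: float = 100.0, fs: float = 10000.0, n: int = 2000) -> List[float]:
    # Source: an impulse train at the fundamental frequency (a crude glottal stand-in).
    period = int(round(fs / f0))
    source = [1.0 if i % period == 0 else 0.0 for i in range(n)]
    silence = [0.0] * n
    # The excitation is routed into exactly one of the two parallel branches.
    oral_out = cascade(silence if use_nasal else source, oral, fs)
    nasal_out = cascade(source if use_nasal else silence, nasal, fs)
    # Branch outputs are summed; radiation at the lips/nostrils is approximated
    # by a first difference, which boosts high frequencies.
    mixed = [o + m for o, m in zip(oral_out, nasal_out)]
    return [mixed[0]] + [mixed[i] - mixed[i - 1] for i in range(1, n)]


def magnitude_at(x: Sequence[float], freq: float, fs: float) -> float:
    """Magnitude of the single DFT coefficient of x at `freq` Hz."""
    return abs(sum(v * cmath.exp(-2j * math.pi * freq * i / fs) for i, v in enumerate(x)))
```

Consider `synthesize([(730, 90), (1090, 110), (2440, 170)], [(250, 100), (2500, 200)], use_nasal=False)`. It yields a buzzy, roughly /ɑ/-like vowel whose spectrum, as `magnitude_at` shows, is much stronger near 700 Hz than near 300 Hz. Setting `use_nasal=True` routes the same source through the equally illustrative nasal branch instead.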


However, this method is not an accurate model of the vocal tract, because it allows separate and independent control over every aspect of each individual formant. In a real vocal tract, the configuration of the entire system shapes the formants at once. It is therefore not possible to isolate one part and base an artificial model on it (ibid., p. 399).
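The transfer function of a cascade formant synthesizer shows this independence. Assume K second-order digital resonators in series with sampling period T, in the Klatt-style parameterization. This is an assumption, since the source does not name a specific design. Each factor then depends only on its own formant frequency $F_i$ and bandwidth $\mathrm{BW}_i$:

$$
H(z) = \prod_{i=1}^{K} \frac{a_i}{1 - b_i z^{-1} - c_i z^{-2}}, \qquad
b_i = 2 e^{-\pi \mathrm{BW}_i T} \cos(2\pi F_i T), \quad
c_i = -e^{-2\pi \mathrm{BW}_i T}, \quad
a_i = 1 - b_i - c_i
$$

Changing $F_2$ therefore moves only the second pole pair and leaves the others where they were. In a real vocal tract, by contrast, moving a single articulator such as the tongue shifts several formants at once.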

Articulatory synthesis

Articulatory synthesis is the oldest form of speech synthesis. It tries to mimic the entire human vocal tract and the way air passes through it during speech. Modern synthesizers of this kind are based on an acoustic tube model similar to the one used in formant synthesis. Unlike formant synthesizers, however, articulatory synthesis treats the configuration of the whole tube system as its parameters and the tube shapes themselves as its controls. This allows very complex models to be built. Yet the more complex the model, the harder it becomes to track the behaviour of its internals, because a small change in the tube settings propagates into complex speech patterns. This is also what makes articulatory synthesis attractive: researchers do not need to model complex formant trajectories explicitly. In other words, artificial speech is produced by controlling the modelled vocal tract, not by controlling the produced sound itself.[2]
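The simplest case makes this concrete. Assume a lossless tube of uniform cross-section and length $L$, closed at the glottis and open at the lips, with speed of sound $c$. Its resonances are then the odd quarter-wavelength frequencies. The figures 17.5 cm and 350 m/s are common textbook approximations for an adult male vocal tract, not values taken from this article's sources.

$$
F_n = \frac{(2n - 1)\,c}{4L}, \qquad n = 1, 2, 3, \ldots
$$

$$
L = 0.175\ \text{m},\; c = 350\ \text{m/s} \;\Rightarrow\; F_1 = \frac{350}{0.7} = 500\ \text{Hz},\quad F_2 = 1500\ \text{Hz},\quad F_3 = 2500\ \text{Hz}
$$

Nothing in the formula sets a formant directly. Lengthening the tube lowers all of them at once, which is the sense in which the tract, rather than the sound, is being controlled.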

Articulatory synthesis simulates the airflow through the human vocal tract. Unlike in formant synthesis, the modelled system is controlled as a whole, because it does not consist of connected, discrete modules. Changing the characteristics of its components, the tubes, produces different aspects of the voice.
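The following sketch models the tract as a chain of lossless cylindrical sections described only by their cross-sectional areas (an area function). The formants then follow from the geometry through the standard chain-matrix (transfer-matrix) analysis. The sketch assumes plane-wave propagation without losses, equal section lengths, a closed glottis, zero pressure at the lips, and c = 350 m/s. It ignores radiation impedance, wall losses, and the nasal branch. The function names are hypothetical, and this is not the implementation used by VocalTractLab or any other synthesizer.

```python
import math
from typing import List, Sequence

SPEED_OF_SOUND = 350.0  # m/s, a common approximation for warm, humid air


def d_entry(areas: Sequence[float], section_len: float, freq: float,
            c: float = SPEED_OF_SOUND) -> float:
    """D entry of the lossless chain matrix [[A, jB], [jC, D]] of a tube made
    of equal-length cylindrical sections listed from glottis to lips.
    With the glottis closed and zero pressure at the lips,
    U_lips / U_glottis = 1 / D, so the tube resonates wherever D = 0."""
    kl = 2 * math.pi * freq / c * section_len
    cs, sn = math.cos(kl), math.sin(kl)
    # Start from the identity; b and c_im hold the imaginary off-diagonal parts.
    a, b, c_im, d = 1.0, 0.0, 0.0, 1.0
    for area in areas:
        z = 1.0 / area  # characteristic impedance rho*c/area with rho*c dropped (only ratios matter)
        # Multiply by the section matrix [[cos, j*z*sin], [j*sin/z, cos]].
        a, b, c_im, d = (a * cs - b * sn / z,
                         a * z * sn + b * cs,
                         c_im * cs + d * sn / z,
                         d * cs - c_im * z * sn)
    return d


def formants(areas: Sequence[float], section_len: float, fmax: float = 4000.0,
             step: float = 10.0, c: float = SPEED_OF_SOUND) -> List[float]:
    """Resonance frequencies up to about fmax: scan a frequency grid for sign
    changes of d_entry, then refine each bracketed root by bisection."""
    found: List[float] = []
    f_lo = 1.0  # start off the round-number grid so roots rarely land exactly on it
    d_lo = d_entry(areas, section_len, f_lo, c)
    while f_lo < fmax:
        f_hi = f_lo + step
        d_hi = d_entry(areas, section_len, f_hi, c)
        if d_lo * d_hi < 0:  # D changes sign, so a formant lies in (f_lo, f_hi)
            lo, hi, d_at_lo = f_lo, f_hi, d_lo
            for _ in range(50):
                mid = (lo + hi) / 2
                d_mid = d_entry(areas, section_len, mid, c)
                if d_at_lo * d_mid <= 0:
                    hi = mid
                else:
                    lo, d_at_lo = mid, d_mid
            found.append((lo + hi) / 2)
        f_lo, d_lo = f_hi, d_hi
    return found
```

A uniform tube of ten 1.75 cm sections gives formants near 500, 1500, 2500 and 3500 Hz. Narrowing the back half and widening the front half (areas of 1 and 7 cm², roughly an /ɑ/-like configuration) raises F1 to about 770 Hz and lowers F2 to about 1230 Hz, without any formant being set directly.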

Articulatory synthesis faces two inherent problems. First, the model must strike a balance between being precise but complex and being simple but observable and controllable. Because the vocal tract is very complex, models that mimic it closely are inherently complicated, which makes them difficult to track and control. This leads to the second problem: the model of the vocal tract must be based on data that are as precise as possible. Imaging methods such as MRI or X-ray are used to observe the human vocal tract and its workings during speech production. These data are then used to construct the articulatory model. However, such methods are often intrusive, and the data they yield are difficult to interpret.

Both obstacles reduce the quality of the speech these models produce compared to modern methods, and articulatory synthesis has largely been abandoned for the purpose of speech synthesis. It is still used, however, in research on the physiology of the human vocal tract and on phonology, and for visualizing the human head during speech (Taylor, p. 417).

References

[1] http://www.cs.cmu.edu/~awb/papers/IEEE2002/allthetime/allthetime.html
[2] http://www.vocaltractlab.de/index.php?page=background-articulatory-synthesis